Count the scaler

From APS Python Training for Bluesky Data Acquisition.

Objective

In this notebook, we show how to count the scaler:

on the command line

using ophyd

using the bluesky RunEngine

We also show how to:

set the counting time

choose certain channels for data acquisition

Start the instrument package

Our instrument package is in the bluesky subdirectory of the home directory (~/bluesky), so we add that directory to the module search path (sys.path) before importing it.

[1]:

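import pathlib, sys

# add ~/bluesky to the module search path so Python can find the instrument package
sys.path.append(str(pathlib.Path.home() / "bluesky"))

# load the instrument's devices, callbacks, plans, and utilities into this session
from instrument.collection import *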

/home/prjemian/bluesky/instrument/_iconfig.py

Activating auto-logging. Current session state plus future input saved.

Filename : /home/prjemian/Documents/projects/BCDA-APS/bluesky_training/docs/source/howto/.logs/ipython_console.log

Mode : rotate

Output logging : True

Raw input log : False

Timestamping : True

State : active

I Thu-13:07:12 - ############################################################ startup

I Thu-13:07:12 - logging started

I Thu-13:07:12 - logging level = 10

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/session_logs.py

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/collection.py

I Thu-13:07:12 - CONDA_PREFIX = /home/prjemian/.conda/envs/bluesky_2023_2

Exception reporting mode: Minimal

I Thu-13:07:12 - xmode exception level: 'Minimal'

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/mpl/notebook.py

I Thu-13:07:12 - #### Bluesky Framework ####

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/framework/check_python.py

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/framework/check_bluesky.py

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/framework/initialize.py

I Thu-13:07:12 - RunEngine metadata saved in directory: /home/prjemian/Bluesky_RunEngine_md

I Thu-13:07:12 - using databroker catalog 'training'

I Thu-13:07:12 - using ophyd control layer: pyepics

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/framework/metadata.py

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/epics_signal_config.py

I Thu-13:07:12 - Using RunEngine metadata for scan_id

I Thu-13:07:12 - #### Devices ####

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/devices/area_detector.py

I Thu-13:07:12 - /home/prjemian/bluesky/instrument/devices/calculation_records.py

I Thu-13:07:14 - /home/prjemian/bluesky/instrument/devices/fourc_diffractometer.py

I Thu-13:07:15 - /home/prjemian/bluesky/instrument/devices/ioc_stats.py

I Thu-13:07:15 - /home/prjemian/bluesky/instrument/devices/kohzu_monochromator.py

I Thu-13:07:15 - /home/prjemian/bluesky/instrument/devices/motors.py

I Thu-13:07:15 - /home/prjemian/bluesky/instrument/devices/noisy_detector.py

I Thu-13:07:15 - /home/prjemian/bluesky/instrument/devices/scaler.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/devices/shutter_simulator.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/devices/simulated_fourc.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/devices/simulated_kappa.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/devices/slits.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/devices/sixc_diffractometer.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/devices/temperature_signal.py

I Thu-13:07:16 - #### Callbacks ####

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/callbacks/spec_data_file_writer.py

I Thu-13:07:16 - #### Plans ####

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/plans/lup_plan.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/plans/peak_finder_example.py

I Thu-13:07:16 - /home/prjemian/bluesky/instrument/utils/image_analysis.py

I Thu-13:07:16 - #### Utilities ####

I Thu-13:07:16 - writing to SPEC file: /home/prjemian/Documents/projects/BCDA-APS/bluesky_training/docs/source/howto/20230413-130716.dat

I Thu-13:07:16 - >>>> Using default SPEC file name <<<<

I Thu-13:07:16 - file will be created when bluesky ends its next scan

I Thu-13:07:16 - to change SPEC file, use command: newSpecFile('title')

I Thu-13:07:16 - #### Startup is complete. ####
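The startup cell works because Python looks for packages in every directory listed in sys.path. A self-contained sketch of the same mechanism, assuming a throwaway package named demo_instrument (hypothetical) that is created in a temporary directory instead of ~/bluesky:

```python
import importlib
import pathlib
import sys
import tempfile

# Build a throwaway package in a temporary directory.
# "demo_instrument" is a hypothetical stand-in for the real instrument package.
tmp = pathlib.Path(tempfile.mkdtemp())
pkg = tmp / "demo_instrument"
pkg.mkdir()
(pkg / "__init__.py").write_text("GREETING = 'instrument loaded'\n")

# Before its parent directory is on the search path, the import fails.
try:
    importlib.import_module("demo_instrument")
    found_before = True
except ModuleNotFoundError:
    found_before = False

# Append the parent directory, just as the startup cell appends ~/bluesky.
sys.path.append(str(tmp))
importlib.invalidate_caches()  # make sure the import system notices the new directory
demo_instrument = importlib.import_module("demo_instrument")

print(found_before)             # False
print(demo_instrument.GREETING)  # instrument loaded
```

Appending (rather than inserting at the front) means packages already on the path keep priority, which is the same choice the startup cell makes.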

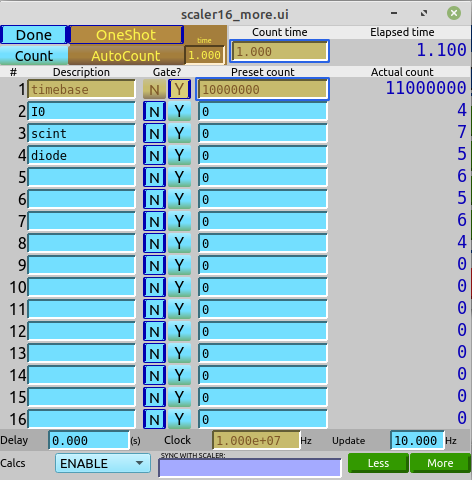

A closer look at the EPICS scaler

A scaler is a device that counts digital pulses from a pulse detector, such as a scintillation counter, or from photodiodes or ionization chambers with pulse-chain electronics. Scalers have many channels, some of which might have no associated detector. Our scaler (scaler1) is a simulated device that records a random number of pulses in each channel. We are interested only in the channels that users have named in the GUI screens. In the control screen for our scaler, only a few of the channels are named.

Let’s configure scaler1 to report only the scint and I0 channels (plus the count time channel, which is always included). Pass the channel names to select_channels() as a Python list, enclosed in []. The method returns nothing, so a successful call prints nothing; any printed output indicates an error to be fixed.

[2]:

scaler1.select_channels(["scint", "I0"])
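The selection rule can be modeled in plain Python: requested names are matched against the user-assigned channel names, a call with no names selects every named channel, and an unknown name is an error. The function select_named_channels() and the channel-to-name mapping below are hypothetical illustrations, not ophyd's implementation; on a real scaler the names come from the EPICS channel name fields, and the count time channel (always reported) is not modeled.

```python
# Hypothetical mapping of scaler channel attributes to user-assigned names.
# Unnamed channels have an empty string, as they would in the EPICS GUI.
CHANNEL_NAMES = {
    "chan01": "timebase",
    "chan02": "I0",
    "chan03": "",
    "chan04": "scint",
    "chan05": "",
}


def select_named_channels(channel_names, wanted=None):
    """Return the channel attributes to read for the requested names.

    wanted=None plays the role of calling select_channels() with empty
    parentheses: every channel with a non-empty name is selected.
    """
    # Map each user-assigned name back to its channel attribute.
    name_map = {name: attr for attr, name in channel_names.items() if name}
    if wanted is None:
        wanted = list(name_map)
    selected = []
    for name in wanted:
        if name not in name_map:
            # In this model an unknown name is an error, reported loudly.
            raise RuntimeError(
                f"Channel {name!r} is not named on the scaler; "
                f"named channels are {tuple(name_map)}"
            )
        selected.append(name_map[name])
    return selected


print(select_named_channels(CHANNEL_NAMES, ["scint", "I0"]))  # ['chan04', 'chan02']
print(select_named_channels(CHANNEL_NAMES))  # ['chan01', 'chan02', 'chan04']
```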

The easiest way to count the scaler object is to use the %ct bluesky magic command, which counts all objects with the detectors label.

Note that the magic commands are available only at the interactive command line (IPython or a Jupyter notebook); they cannot be used inside a bluesky plan function.

[3]:

%ct

[This data will not be saved. Use the RunEngine to collect data.]

noisy 0.0

I0 7.0

scint 9.0

scaler1_time 1.6

Compare with the reading when all channels are selected.

NOTE: To report all named channels, call .select_channels() with empty parentheses.

[4]:

scaler1.select_channels()

%ct

[This data will not be saved. Use the RunEngine to collect data.]

noisy 0.0

timebase 16000000.0

I0 8.0

scint 7.0

I000 8.0

I00 7.0

roi1 8.0

scaler1_time 1.6

Now, select just the two channels again before continuing:

[5]:

scaler1.select_channels(["scint", "I0"])

As noted before, the %ct command is only available from the command shell.
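Behind %ct is a selection by label: every object carrying the detectors label is counted, which is why noisy appears alongside the scaler channels. A plain-Python sketch of that selection step, assuming a hypothetical registry of object names and label sets (ophyd objects do accept a labels argument, but the registry, the helper objects_with_label(), and the m1 entry are illustrative only):

```python
# Hypothetical registry: object name -> set of labels attached to it.
LABELS = {
    "scaler1": {"detectors"},
    "noisy": {"detectors"},
    "m1": {"motors"},
}


def objects_with_label(registry, label):
    """Return, in sorted order, the names of objects that carry ``label``."""
    return sorted(name for name, labels in registry.items() if label in labels)


print(objects_with_label(LABELS, "detectors"))  # ['noisy', 'scaler1']
```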

use ophyd to count the scaler

We should learn how to use the underlying Python code to do the same steps.

The first step is to use pure ophyd methods to count and report; afterwards, we will use a bluesky plan to do the same thing. The ophyd methods are trigger(), wait(), and read(). Because trigger() returns a status object and wait() is a method of that status object, the two calls can be chained:

[6]:

scaler1.trigger().wait()
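The chaining works because trigger() returns at once with a status object, and the status object's wait() blocks until the acquisition finishes. A minimal thread-based model of that contract, assuming hypothetical Status and FakeScaler classes (real ophyd status objects also support callbacks and error reporting, which are left out here):

```python
import threading
import time


class Status:
    """Hypothetical stand-in for an ophyd status object."""

    def __init__(self):
        self._finished = threading.Event()

    @property
    def done(self):
        return self._finished.is_set()

    def set_finished(self):
        self._finished.set()

    def wait(self, timeout=None):
        # Block until the action reports completion (or the timeout expires).
        if not self._finished.wait(timeout):
            raise TimeoutError("status did not finish in time")


class FakeScaler:
    """Hypothetical scaler that 'counts' in a background thread."""

    def __init__(self, count_time=0.05):
        self.count_time = count_time
        self.counts = None

    def trigger(self):
        status = Status()

        def acquire():
            time.sleep(self.count_time)  # pretend to accumulate pulses
            self.counts = 42             # pretend result
            status.set_finished()

        threading.Thread(target=acquire, daemon=True).start()
        return status  # returned at once, before counting has finished


fake = FakeScaler()
fake.trigger().wait(timeout=5)  # chained: start counting, then block until done
print(fake.counts)  # 42
```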

Technically, we should stage and unstage the object. We’ll use staging to control the count time of the scaler.

The ophyd .stage() method (https://blueskyproject.io/ophyd/generated/ophyd.device.BlueskyInterface.stage.html?highlight=stage#ophyd.device.BlueskyInterface.stage) prepares the object for its .trigger() method, while the .unstage() method restores the object’s settings to the state they had before .stage() was called.

[7]:

scaler1.stage()

scaler1.trigger().wait()

scaler1.unstage()

[7]:

[Channels(prefix='gp:scaler1', name='scaler1_channels', parent='scaler1', read_attrs=['chan02', 'chan02.s', 'chan04', 'chan04.s'], configuration_attrs=['chan02', 'chan02.chname', 'chan02.preset', 'chan02.gate', 'chan04', 'chan04.chname', 'chan04.preset', 'chan04.gate']),

ScalerCH(prefix='gp:scaler1', name='scaler1', read_attrs=['channels', 'channels.chan02', 'channels.chan02.s', 'channels.chan04', 'channels.chan04.s', 'time'], configuration_attrs=['channels', 'channels.chan02', 'channels.chan02.chname', 'channels.chan02.preset', 'channels.chan02.gate', 'channels.chan04', 'channels.chan04.chname', 'channels.chan04.preset', 'channels.chan04.gate', 'count_mode', 'delay', 'auto_count_delay', 'freq', 'preset_time', 'auto_count_time', 'egu'])]

Let’s find out what happens when scaler1 is staged. That’s controlled by the contents of a Python dictionary .stage_sigs:

[8]:

scaler1.stage_sigs

[8]:

OrderedDict()

It’s empty, so nothing has been preconfigured for us. Let’s arrange for the counting time (the time to accumulate pulses in the various channels) to be set to 2 seconds whenever scaler1 is staged:

[9]:

scaler1.stage_sigs["preset_time"] = 2

scaler1.stage_sigs

[9]:

OrderedDict([('preset_time', 2)])
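What staging does with these stage_sigs can be modeled in plain Python: stage() records the current value of each setting named in stage_sigs before overwriting it, and unstage() puts the recorded values back in reverse order. StagedDevice is a hypothetical class that ignores details of the real ophyd implementation, such as staging of child devices; the values 1.5 and 2 mirror the count times used in this notebook.

```python
from collections import OrderedDict


class StagedDevice:
    """Hypothetical device that models ophyd-style stage_sigs handling."""

    def __init__(self, **settings):
        self.settings = dict(settings)   # current "hardware" settings
        self.stage_sigs = OrderedDict()  # values to apply while staged
        self._original = OrderedDict()   # values saved by stage()

    def stage(self):
        # Remember each current value, then apply the staged value.
        for key, value in self.stage_sigs.items():
            self._original[key] = self.settings[key]
            self.settings[key] = value

    def unstage(self):
        # Put the remembered values back, in reverse order.
        for key, value in reversed(list(self._original.items())):
            self.settings[key] = value
        self._original.clear()


device = StagedDevice(preset_time=1.5)
device.stage_sigs["preset_time"] = 2

device.stage()
try:
    during = device.settings["preset_time"]  # staged value
finally:
    device.unstage()  # restore, even if something failed while staged
after = device.settings["preset_time"]

print(during, after)  # 2 1.5
```

The try/finally reflects a useful habit: once a device is staged, unstage it even if something goes wrong in between, so its original settings are not left behind.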

Show the counting time before we count, then stage, trigger, wait, read, unstage, then finally show the counting time after we count:

[10]:

print(f"Scaler configured to count for {scaler1.preset_time.get()}s")

scaler1.stage()

scaler1.trigger().wait()

print(scaler1.read())

scaler1.unstage()

print(f"Scaler configured to count for {scaler1.preset_time.get()}s")

Scaler configured to count for 1.5s

OrderedDict([('I0', {'value': 12.0, 'timestamp': 1681409246.542347}), ('scint', {'value': 10.0, 'timestamp': 1681409246.542347}), ('scaler1_time', {'value': 2.1, 'timestamp': 1681409244.179794})])

Scaler configured to count for 1.5s

The report from .read() includes both values and timestamps (in seconds since the Unix epoch, 1970-01-01 UTC). The structure is a Python dictionary keyed by signal name. This is the low-level method used to collect readings from any ophyd device. We had to print() the reading because a notebook cell automatically displays only the result of its final line, and .read() is not the last line in this cell.

The scaler counted for 2.1 seconds (it seems the scaler simulator has a small bug that always adds 0.1 s to the count time). Before staging, the scaler was configured for 1.5 seconds, and after unstaging, it returned to that value.

That’s how to control the counting time for a scaler. (Area detectors use different terms. More on that later.)
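Since .read() returns an ordinary dictionary, standard Python is enough to unpack it. A short sketch using the reading printed above (copied as a literal so it runs without the scaler) that separates the values from the timestamps and converts a timestamp to a UTC datetime:

```python
from datetime import datetime, timezone

# The reading printed above by scaler1.read(), copied verbatim.
reading = {
    "I0": {"value": 12.0, "timestamp": 1681409246.542347},
    "scint": {"value": 10.0, "timestamp": 1681409246.542347},
    "scaler1_time": {"value": 2.1, "timestamp": 1681409244.179794},
}

# Keep just the values, keyed by signal name.
values = {name: entry["value"] for name, entry in reading.items()}

# Convert each timestamp (seconds since the Unix epoch) to an aware UTC datetime.
stamps = {
    name: datetime.fromtimestamp(entry["timestamp"], tz=timezone.utc)
    for name, entry in reading.items()
}

print(values)  # {'I0': 12.0, 'scint': 10.0, 'scaler1_time': 2.1}
print(stamps["I0"].strftime("%Y-%m-%d %H:%M:%S %Z"))  # 2023-04-13 18:07:26 UTC
```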

about scaler1_time

Note the key scaler1_time: it is the name of the ophyd signal scaler1.time, as reported by scaler1.time.name:

In [21]: scaler1.time.name

Out [21]: 'scaler1_time'
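The key appears to follow ophyd's default naming convention: a component's name is its parent's name, an underscore, and the attribute name (compare name='scaler1_channels' in the staging output above). A tiny sketch of that rule, using a hypothetical helper default_signal_name(); the channel readings are reported as I0 and scint instead, presumably because the scaler renames those signals to the channel names set in EPICS.

```python
def default_signal_name(device_name, dotted_attr):
    """Hypothetical helper: join a device name and an attribute path with '_'."""
    return "_".join([device_name, *dotted_attr.split(".")])


print(default_signal_name("scaler1", "time"))               # scaler1_time
print(default_signal_name("scaler1", "channels"))           # scaler1_channels
print(default_signal_name("scaler1", "channels.chan02.s"))  # scaler1_channels_chan02_s
```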

use bluesky (the package) to count the scaler

Now, use the bluesky RunEngine (RE) to count scaler1 with the count() plan from bluesky.plans (imported as bp). To be consistent with the result from %ct, we also include the noisy detector.

[11]:

RE(bp.count([scaler1,noisy]))

Transient Scan ID: 932 Time: 2023-04-13 13:07:26

Persistent Unique Scan ID: 'de519ff4-fb72-4f15-8ee3-d0b11db127da'

New stream: 'label_start_motor'

New stream: 'primary'

+-----------+------------+------------+------------+------------+
|   seq_num |       time |      noisy |         I0 |      scint |
+-----------+------------+------------+------------+------------+
|         1 | 13:07:28.9 |    0.00000 |         12 |          8 |
+-----------+------------+------------+------------+------------+

generator count ['de519ff4'] (scan num: 932)

[11]:

('de519ff4-fb72-4f15-8ee3-d0b11db127da',)
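Behind the call RE(bp.count(...)), the plan is a Python generator that yields instructions and the RunEngine is the loop that carries them out. A toy model of that division of labor, assuming hypothetical toy_count() and toy_run_engine() functions and plain tuples as messages; bluesky's real count() emits richer message objects, so this sketch shows only the overall shape: stage, open a run, trigger and read each detector, close the run, unstage.

```python
def toy_count(detectors, num=1):
    """Hypothetical plan: a generator of (command, target) instructions."""
    for det in detectors:
        yield ("stage", det)
    yield ("open_run", None)
    for _ in range(num):
        for det in detectors:
            yield ("trigger", det)
        yield ("wait", None)  # wait for all triggers to finish
        for det in detectors:
            yield ("read", det)
        yield ("save", None)  # bundle these readings into one event
    yield ("close_run", None)
    for det in reversed(detectors):
        yield ("unstage", det)


def toy_run_engine(plan):
    """Hypothetical engine: 'execute' each instruction by logging it."""
    log = []
    for command, target in plan:
        log.append(command if target is None else f"{command} {target}")
    return log


for step in toy_run_engine(toy_count(["scaler1", "noisy"])):
    print(step)
```

Keeping the plan free of side effects is what lets the same plan be inspected, simulated, or run for real by a different engine.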

There are many ways to view data from bluesky runs. The most recent run can be selected from the databroker catalog (cat) with Python's index for the last item, [-1]. We need the v2 interface of databroker to access the .primary data stream.

We’ll pick one simple way to view the run data: as an xarray Dataset, since such structured content is easy to display in a Jupyter notebook. (We could just as easily have obtained a dask-backed Dataset by replacing .read() with .to_dask().)

[12]:

cat[-1].primary.read()

[12]:

<xarray.Dataset>

Dimensions: (time: 1)

Coordinates:

* time (time) float64 1.681e+09

Data variables:

noisy (time) float64 0.0

I0 (time) float64 12.0

scint (time) float64 8.0

scaler1_time (time) float64 2.1

As a last action in this section, use the listruns() command from apstools to show the (default: 20) most recent runs in the database. Each row of the table shows a run's scan_id, start time, plan_name, and detectors. The databroker catalog used here is training, as reported at startup.

[13]:

listruns()

/home/prjemian/.conda/envs/bluesky_2023_2/lib/python3.10/site-packages/databroker/queries.py:89: PytzUsageWarning: The zone attribute is specific to pytz's interface; please migrate to a new time zone provider. For more details on how to do so, see https://pytz-deprecation-shim.readthedocs.io/en/latest/migration.html

timezone = lz.zone

[13]:

======= =================== ========= ==========================
scan_id time                plan_name detectors
======= =================== ========= ==========================
932     2023-04-13 13:07:26 count     ['scaler1', 'noisy']
931     2023-04-13 13:06:44 count     ['scaler1', 'noisy']
930     2023-04-11 18:48:34 scan      ['noisy', 'th_tth_permit']
929     2023-04-11 18:48:09 scan      ['noisy', 'th_tth_permit']
928     2023-04-11 18:47:57 scan      ['noisy', 'th_tth_permit']
927     2023-04-11 18:47:42 scan      ['noisy', 'th_tth_permit']
926     2023-04-11 18:47:33 scan      ['noisy', 'th_tth_permit']
925     2023-04-11 18:46:11 scan      ['noisy', 'th_tth_permit']
924     2023-04-11 18:45:11 scan      ['noisy', 'th_tth_permit']
923     2023-04-11 18:44:49 scan      ['noisy', 'th_tth_permit']
922     2023-04-11 18:42:15 scan      ['noisy', 'th_tth_permit']
921     2023-04-11 18:41:39 scan      ['noisy', 'th_tth_permit']
920     2023-04-11 18:41:23 scan      ['noisy', 'th_tth_permit']
919     2023-04-11 18:41:15 scan      ['noisy', 'th_tth_permit']
918     2023-04-11 18:40:39 scan      ['noisy', 'th_tth_permit']
917     2023-04-11 18:39:11 scan      ['noisy', 'th_tth_permit']
916     2023-04-11 18:38:51 scan      ['noisy', 'th_tth_permit']
915     2023-04-11 18:38:36 scan      ['noisy', 'th_tth_permit']
914     2023-04-11 18:38:27 scan      ['noisy', 'th_tth_permit']
913     2023-04-11 18:36:37 scan      ['noisy', 'th_tth_permit']
======= =================== ========= ==========================
